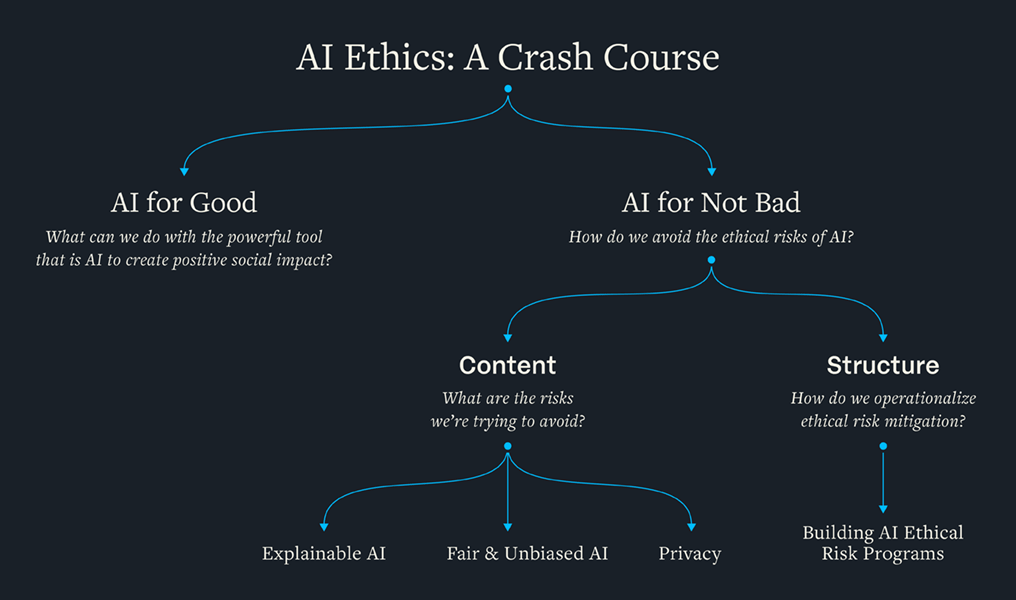

Crash Course on AI Ethics

If you don’t know much about AI ethics and you want a high-level summary of the big issues, this is the page for you. If you like this and you want to go deeper, the book is the place for that (unless you want to bring it to your team, in which case a workshop might make sense).

AI’s Amazing Ability to Discriminate

At its core, AI does one thing: it learns by example.

The examples it learns from are often the things people have done a lot of, like discriminating against women and people of color. So your AI learns to discriminate against women and people of color. And since AI does things at scale at blinding speeds, you get discrimination at scale at blinding speeds.

The Problem isn’t the Bad Behavior of Engineers or Data Scientists

A lot of discrimination is the result of people with bad intentions. When it comes to AI, the intentions of the creators of the AIs aren’t the problem. They can even try really hard to create non-discriminatory AI and fail. That’s because the source of the discrimination – actually, the sources – can be very difficult to identify. And once identified, they can be even harder to mitigate.

The Real Source of the Problem

Let’s say your organization gets a bonkers amount of resumes submitted to HR every day.

You could have a small army of people combing through these resumes, but that’s time-consuming and expensive, so you decide to let AI have a crack at it.

First order of business? You get thousands of resumes you’ve received in the past and divide them into two piles: those that someone said deserve an interview and those that someone said did not. Then you give those resumes (labeled “interview worthy” and “not interview worthy”) to your AI and tell it to learn what interview-worthy resumes look like. Your AI – which is just fancy software that learns by example – “reads” all the resumes and so “learns” what an interview-worthy resume looks like. Excellent!

Now you give your AI new resumes, and it green-lights some (“you get an interview!”) and red-lights others (“no interview for you!”). So, what did your AI learn?

It might have learned that your organization tends not to give interviews to women, so it red-lights women’s resumes.

You’ve got a biased AI on your hands.
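To make this concrete, here is a minimal sketch in Python of a “learn by example” screener. Everything in it is hypothetical: the resume features (`years_experience`, `womens_college`), the tiny historical dataset, and the scoring rule are invented for illustration, and real screening systems are far more complex. The point is only that a model trained on biased decisions reproduces them, even though nobody told it anything about gender.

```python
from collections import defaultdict

# Hypothetical historical decisions: (features, got_interview).
# "womens_college" stands in for any feature that correlates with gender.
HISTORY = [
    ({"years_experience": "5+", "womens_college": False}, True),
    ({"years_experience": "5+", "womens_college": False}, True),
    ({"years_experience": "5+", "womens_college": True}, False),
    ({"years_experience": "5+", "womens_college": True}, False),
    ({"years_experience": "<5", "womens_college": False}, True),
    ({"years_experience": "<5", "womens_college": False}, False),
    ({"years_experience": "<5", "womens_college": True}, False),
    ({"years_experience": "<5", "womens_college": True}, False),
]


def train(history):
    """Learn, for each (feature, value) pair, how often it led to an interview."""
    counts = defaultdict(lambda: [0, 0])  # (feature, value) -> [interviews, total]
    for features, got_interview in history:
        for item in features.items():
            counts[item][0] += int(got_interview)
            counts[item][1] += 1
    return {item: yes / total for item, (yes, total) in counts.items()}


def predict(model, features, threshold=0.5):
    """Green-light a resume if its average learned interview rate clears the threshold."""
    rates = [model[item] for item in features.items()]
    return sum(rates) / len(rates) >= threshold


model = train(HISTORY)

# Two new resumes that differ only in the gender-correlated feature.
resume_a = {"years_experience": "5+", "womens_college": False}
resume_b = {"years_experience": "5+", "womens_college": True}

decision_a = predict(model, resume_a)  # green-lighted
decision_b = predict(model, resume_b)  # red-lighted
print(decision_a, decision_b)
```

The two resumes list the same experience, yet only the first gets through. The model never saw a “gender” field; it simply learned what past decisions rewarded.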

It’s easy for this to get out of control. You give examples of “creditworthy” and “not creditworthy” applications, and you get…black people denied credit at a much higher rate than, say, white people.

You give your facial recognition software lots of examples of people’s faces but not enough examples of black women, and now your AI can’t recognize black women.

You give your search engine AI lots of examples of CEOs…and then your AI only shows pictures of men when you search for “CEO.”

And on and on and on.

The Data Scientists Can’t Solve this Problem By Themselves

You’d be forgiven for thinking that this is all a technical problem with the data (and in truth, it’s not just the data, but other things like how the “goal” of the AI is chosen). But while there is software that offers various quantitative analyses to help data scientists identify and mitigate the discriminatory effects of AI, that approach is far from sufficient.
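As one hedged illustration of what such a quantitative analysis can look like, the Python sketch below compares how often each group receives a favorable outcome. The data, group labels, and function names are hypothetical. The 0.8 cutoff is an assumption borrowed from the “four-fifths” rule of thumb used in US employment contexts; it is a screening heuristic, not a definition of fairness.

```python
def selection_rates(decisions):
    """Return the share of favorable outcomes per group.

    `decisions` is a list of (group, approved) pairs.
    """
    totals, approved = {}, {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    return min(rates.values()) / max(rates.values())


# Hypothetical model outputs: 10 applicants per group.
decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # assumption: four-fifths rule of thumb as the cutoff
print(rates, ratio, flagged)
```

A number like this can tell you that a disparity exists. It can’t tell you whether the disparity is justified or which notion of fairness fits the use case.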

You Need a Cross-Functional Team

I don’t care if you call it an ethics committee or panel or council or institutional review board or risk board or whatever: your AI ethical risk program needs one.

Yes, you need data scientists involved.

You also need – brace yourself – ethicists who can help everyone think through the various kinds of discrimination and the appropriate way to think about fairness for each particular use case of your AI.

And because we’re in business and not a non-profit social activist organization, you need people who have a deep understanding of the business needs related to the AI.

If you don’t involve a cross-functional team in how your AI is built to mitigate discrimination, you’re just hoping your engineers and data scientists can solve a problem in an area in which they have no expertise. That’s playing with fire both ethically and reputationally.

Want to learn more?

There’s a chapter dedicated to this in Ethical Machines. There’s also my Harvard Business Review article, “If Your Organization Uses AI, It Needs an Institutional Review Board.”

AI recognizes patterns that are too complex for our feeble human minds to grasp

AI denies people interviews, mortgages, and insurance. It fails to see the pedestrian crossing the street and the self-driving car crashes into them. It tells the fraud analyst to investigate this transaction and the doctor that this person has cancer.

When the stakes are this high, we need to know why our AIs are telling us these things. But with “black box” AIs it’s, well, it’s a black box: we don’t know why.

The power of AI to recognize complex patterns is a gift and a curse

What’s 13,254 x 957,395,211?

No? Can’t do that in your head? Even with a pen and paper, it’s difficult? But your calculator can do it in fractions of a second.
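For the curious, one line of Python gives the exact answer (the variable name is just for illustration):

```python
# Python integers have arbitrary precision, so the result is exact.
product = 13_254 * 957_395_211
print(f"{product:,}")  # prints 12,689,316,126,594
```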

And that, in a very tiny nutshell, is why we have black box AI: we can’t explain how the AI arrives at its “decisions.”

You want your AI photo software to recognize all those pictures of your dog. You do that by uploading a bunch of pictures of your dog and labeling those photos “Pepe.” Your AI “learns” what Pepe looks like by looking at the thousands of pixels and the mathematical relations among those pixels in each and every photo. Then it finds the mathematical relations among all those pixels that mean, ruffly, “this is Pepe.”

When you upload a new picture of Pepe and your AI photo software says, “This is Pepe, too!” it has done some insane math. It compared the patterns of pixels in this photo to all the others you uploaded and found a match. The calculations performed are far more complex than the relatively simple multiplication problem I gave you. They’re so complex, we mere mortals can’t even hope to understand them.

This is fine when we’re just talking about labeling photos. But when we’re talking about understanding why the self-driving car killed a person, why the diagnostic AI predicts this person has cancer, and why this person was denied a mortgage or bail, the stakes are much higher, and we need explanations.

Peering into the Black Box Can Be Imperative

Contrary to popular belief in the AI ethics community, you don’t always need explainable AI to trust it. You can test it in various ways that prove it’s reliable enough to be used. But in many cases, you need explanations so you can use your AI effectively: imagine a doctor trying to understand why the AI predicts the patient has cancer. In other cases, you need to provide explanations because it’s required by regulations, as when you deny someone a mortgage.

From a straightforward ethical perspective, you may owe people explanations when the decision your organization comes to upon the “recommendation” of an AI harms them in some way. For instance, when deciding who gets a loan, an interview, or insurance, you need an explanation of your AI so you can assess whether the rules that govern the system are good, reasonable, and fair.

You Need to Tailor Your Explanations to Your Various Stakeholders

Data scientists can use software to help them understand what’s going on with – or rather, inside – their AI creations. But this software isn’t always enough for them, and the explanations it gives are ones only data scientists can understand. Imagine giving the reports intended for data scientists to the layperson who got denied a mortgage – they would be utterly useless.

When solving for black box AI, we need to think about a crucial question: What counts as a good explanation?

The answer will depend on whom you’re giving explanations to. A good explanation for a data scientist is not the same as a good explanation to a layperson, and a good explanation to a layperson may not be a good explanation for a regulator, and none of these may be good explanations for the executive who has to approve deploying the AI.

Your AI ethical risk program, among other things, needs the ability to identify who needs what explanations, when they need them, and how they need them delivered.

The fuel of AI is people’s privacy

AI is trained on data, and often, it’s data about you and me and everyone else.

That means that organizations are incentivized to hoover up as much data as possible and use it to train their AIs, thus violating people’s privacy.

Respecting people’s privacy is about granting them control

All sorts of people talk about privacy, but three loom large: those concerned with regulatory compliance, those concerned with cybersecurity, and those concerned with ethics.

There’s overlap among these things, but when we’re talking about privacy from an ethical perspective, we’re talking about something specific: how much control organizations give to people over their data. Minimally respecting people’s privacy means not undermining their ability to control data about them. Maximally respecting people’s privacy means positively enabling that control.

There’s a lot of data to control

“Artificial intelligence” and “machine learning” are such fancy terms. What do they really refer to? Software that learns by example. Yes, it’s that simple: You give your AI software examples – here’s what my dog looks like, here’s what good resumes look like, here are 1,000 examples of zebras – and it learns from them. When you give it a new thing, it can tell you whether that new thing is like the other examples you gave it: no, this isn’t your dog; yes, this is a good resume; no, that thing isn’t a zebra.

And a fancy word for “examples”? Data.

Some of that data is about you. And me. And your family and friends. And just about everyone. And some of that data is personal: your race, religion, and ethnicity. Your medical history, financial profile, and penchant for patent leather.

Maybe your organization wants to create an AI that makes recommendations to its (potential) customers on what to buy. Perhaps it wants to recommend a suite of services. Or maybe it wants to monitor employee productivity. Whatever your company wants to do with AI, it’s going to need a lot of data, and often, a lot of data about people’s actions, histories, plans, and so on. In other words, your organization is highly incentivized to collect as much data about as many people as it can to better train its AI. This is just inviting invasions of privacy.

But the privacy violations don’t stop there! Not only is your organization incentivized to collect data about people, but it’s also trying to make certain predictions about people that amount to violating their privacy. Your AI can infer, for instance, that you go to a therapist because it has data about where you travel, how often you travel there, where you live, where therapists provide services, and…voilà! The AI knows that you go to a therapist’s office every Thursday at 3 pm.
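Here is a sketch of how little it takes. The Python below infers a recurring appointment from nothing but timestamped location pings. The pings, the place labels, and the rule of “three or more visits in the same weekday-and-hour slot” are all hypothetical, chosen only to illustrate the kind of inference described above.

```python
from collections import Counter
from datetime import datetime

DAYS = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday"]

# Hypothetical location pings: (ISO timestamp, kind of place at that location).
PINGS = [
    ("2024-03-07T15:05", "therapist_office"),
    ("2024-03-09T11:30", "grocery_store"),
    ("2024-03-14T15:02", "therapist_office"),
    ("2024-03-18T08:45", "gym"),
    ("2024-03-21T15:10", "therapist_office"),
    ("2024-03-28T15:01", "therapist_office"),
]


def recurring_visits(pings, min_visits=3):
    """Return (place, weekday, hour) slots seen at least `min_visits` times."""
    slots = Counter()
    for stamp, place in pings:
        when = datetime.fromisoformat(stamp)
        slots[(place, DAYS[when.weekday()], when.hour)] += 1
    return [slot for slot, count in slots.items() if count >= min_visits]


inferred = recurring_visits(PINGS)
print(inferred)  # [('therapist_office', 'Thursday', 15)]
```

Nobody told the software about the therapist. It inferred the appointment from data that looks harmless one ping at a time.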

The Four Ingredients of Ethical Privacy

There are four main ingredients to ethical privacy every organization needs to think about:

- How transparent you are about what data you collect and infer and what you do with that data

- How much control your data subjects (the people about whom you have data) have over the data you collect and infer

- Whether you assume you have data subjects’ consent to collect and do whatever you want with that data

- Whether the services you offer are expanded or curtailed based on how much data people are willing to give you.

Determining how to include these ingredients in your AI ethical risk program is crucial for robust identification and mitigation of ethical and reputational risks relating to violations of privacy.

How many hundreds of millions of dollars does a brand need to spend to rebuild trust?

AI doesn’t only scale solutions – it also scales risk. The responsible deployment of AI – whether it’s developed in-house or procured from a vendor – requires a way for organizations to systematically and comprehensively identify and mitigate those risks. Every organization needs to build, scale, and maintain an AI ethical risk program.

Finding the Right Solution is Imperative…But Don’t Rush It

Most senior leaders find AI ethics perplexing. They want to do the right thing, but ethics seems…“squishy”. It’s not clear how to get your hands around it.

Ethical risk is hard to mitigate when you don’t have a good grip on the problems themselves. Unfortunately, most people see the scary headlines around bias, explainability, and privacy violations and immediately want to spring into action. The impulse is admirable but misguided. Slow down. Understand the problem. Then act.

What You’re Going to Need

Once you do understand the many sources of AI ethical risk, you’ll see there are seven areas to focus on.

- Defining your AI ethical standards

- Growing organizational awareness and training

- Writing and updating policies

- Identifying appropriate processes and tools

- Involving appropriate subject matter experts

- Ensuring compliance with your program is supported by appropriate financial (dis)incentives

- Articulating KPIs for tracking success related to scaling and maintaining your AI ethical risk program

Want to Learn More?

I devote a chapter to this in Ethical Machines. You can also read my Harvard Business Review article, “A Practical Guide to AI Ethics.”